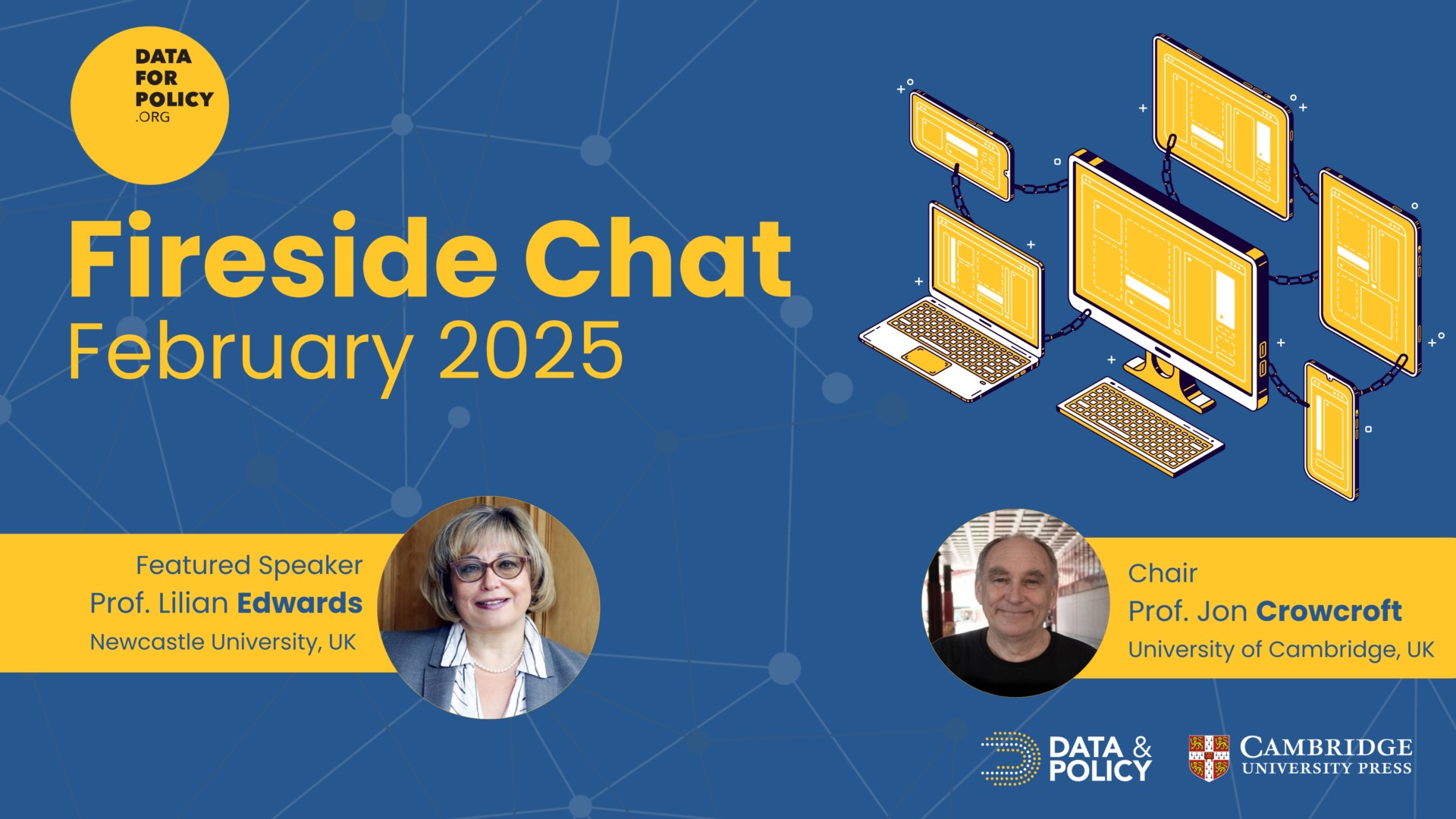

On 26 February 2025, Data for Policy hosted the fourth session of its monthly Fireside Chat series, featuring Professor Lilian Edwards, a leading expert in Law, Innovation, and Society at Newcastle University. The session was chaired by Professor Jon Crowcroft, Marconi Professor of Communications Systems at the University of Cambridge and researcher-at-large at the Alan Turing Institute.

The discussion tackled some of the most pressing issues in AI regulation, particularly the implications of the recently enacted EU AI Act, the geopolitical shifts in AI development, and the societal impact of affordable AI technologies. The speakers provided in-depth insights into the tensions between innovation, safety, and public interest in AI governance.

Key Discussion Points:

- General Purpose AI and Global AI Competition: The rise of general-purpose AI systems, such as ChatGPT, led to rushed regulatory updates within the EU AI Act, including a code of practice set for release by November 2025. Prof. Crowcroft highlighted how decreasing AI training costs are shifting the landscape, allowing China and other nations to gain an edge in AI innovation. Meanwhile, Prof. Edwards discussed the UK’s post-Brexit divergence from EU regulatory alignment and its implications for AI governance.

- EU AI Act: Risk-Based Regulation in Action: The EU AI Act introduces a tiered approach to AI regulation, categorizing AI systems as prohibited, high-risk, or lower-risk. Prof. Edwards described how the Act contrasts with the General Data Protection Regulation (GDPR) and the Digital Markets Act (DMA), emphasizing its reliance on yet-to-be-defined technical standards for implementation.

- Challenges in High-Risk AI Regulation: AI systems used in critical areas such as automated hiring will face regulatory requirements phased in over 2025–2026. However, both speakers noted the difficulty of self-certifying against technical standards that are still evolving, as well as the exclusion of widely deployed AI systems, such as search engines and recommendation algorithms, from the high-risk classification.

- AI Safety, Security, and Hybrid Warfare: The UK’s AI Safety Institute has increasingly focused on national security concerns, particularly the risks posed by AI-enabled hybrid warfare, including misinformation campaigns and drone technologies. Prof. Crowcroft underscored the importance of explainable AI for practical applications like medical imaging, while cautioning against overemphasis on existential AI threats.

- AI in the Public Interest: Responding to a question from Dr. Zeynep Engin, Prof. Edwards examined the extent to which major governments prioritize AI for the public good, suggesting that their strategies are driven more by economic and control motives than by societal benefit. She noted that civil society initiatives and AI applications in healthcare offer promising counterexamples.

- Geopolitics and Future Uncertainty: The discussion also explored how rapid advances in AI, combined with shifting geopolitical dynamics among the US, China, and the EU, are reshaping regulatory approaches. Prof. Edwards expressed concern about the lack of European cloud infrastructure and its implications for digital sovereignty.

The Fireside Chat concluded with reflections on the evolving nature of AI governance and the need for ongoing discussions in this rapidly changing landscape. Data for Policy looks forward to the next session in the series, where these critical issues will continue to be explored.

To gain deeper insights from the experts and hear the full discussion on AI governance, regulation, and geopolitical implications, watch the full session here.